AWS Security: Use a Bastion Host

Never connect directly to your instances!

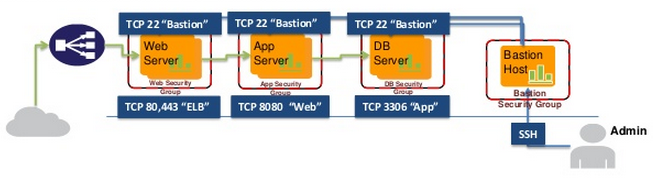

Instead of managing a large list of IPs that are allowed to connect to your servers (your office, your engineers, third parties, and any on-call road warriors), simply add a dedicated server to your environment, which I call a jumpbox (also called a “Bastion Host”), that you connect to first in order to reach the rest of your environment. This lets you proxy all requests through a single IP address. You can even make this extra hop transparent by adding an automatic connection via /etc/profile, which keeps an always-open connection to your “jumpbox” / bastion host.
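Another way to make the hop transparent from your own workstation is a client-side ~/.ssh/config entry that uses OpenSSH’s ProxyJump option. This is a different technique from the /etc/profile approach described above. The sketch assumes OpenSSH 7.3 or later, which introduced ProxyJump. The helper name add_bastion_config, the alias bastion, the user ec2-user, the 203.0.113.10 address and the 10.0.* private range are all hypothetical placeholders.

```shell
# Hypothetical helper: append a "bastion" alias plus a wildcard entry that
# routes every matching private host through it. Requires OpenSSH >= 7.3.
add_bastion_config() {
  config_file=$1   # e.g. "$HOME/.ssh/config"
  bastion_ip=$2    # the bastion's Elastic IP
  remote_user=$3   # login name on the bastion and the instances
  private_glob=$4  # ssh_config host pattern for your private subnet
  cat >> "$config_file" <<EOF

Host bastion
    HostName $bastion_ip
    User $remote_user

Host $private_glob
    User $remote_user
    ProxyJump bastion
EOF
}

# Example (placeholder values):
# add_bastion_config "$HOME/.ssh/config" 203.0.113.10 ec2-user '10.0.*'
# ssh 10.0.1.25    # hops through the bastion automatically
```

The same hop also works ad hoc, without a config file, as ssh -J ec2-user@203.0.113.10 ec2-user@10.0.1.25. With ProxyJump, your workstation authenticates to the inner host through the tunnel, so this hop does not depend on agent forwarding.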

STOP Managing IP access lists!

If you’re an organization concerned with the security of your public-facing servers (this should be all of you), you should not be connecting directly to your instances. Instead, connect to a jumpbox / bastion host first and from there on to your instances. This simplifies security group and firewall management, because you only need to manage access to the jumpbox itself: all of your servers simply whitelist traffic from its IP.
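On each instance, whitelisting the bastion’s IP comes down to a single ingress rule. Below is a minimal sketch using the AWS CLI. It assumes the CLI is installed and configured with credentials. The helper name and the security group ID and IP in the example are hypothetical placeholders.

```shell
# Hypothetical helper: allow SSH (tcp/22) into a security group only from
# the bastion's Elastic IP. Assumes the AWS CLI is installed and configured.
allow_ssh_from_bastion() {
  target_group_id=$1   # security group attached to your instances
  bastion_ip=$2        # the bastion's Elastic IP
  aws ec2 authorize-security-group-ingress \
    --group-id "$target_group_id" \
    --protocol tcp \
    --port 22 \
    --cidr "${bastion_ip}/32"
}

# Example (placeholder IDs):
# allow_ssh_from_bastion sg-0123456789abcdef0 203.0.113.10
```

An alternative is to reference the bastion’s own security group with --source-group instead of --cidr. That rule keeps working even if the bastion’s address ever changes.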

We use a CloudFormation script to automate the deployment of our security groups to all new instances. If you haven’t used CloudFormation yet, I highly recommend it for automating your server deployments. I initially built this with the AWS PHP SDK, but that was rudimentary compared to CloudFormation, which handles error checking, automatic rollback and updates to your environment for you. We’ll also use SSH agent forwarding, enabled by passing the “-A” flag when you connect to the bastion host, so that you never have to store your SSH private key on the bastion host itself. (Never store your private keys where anyone else can access them; doing so dissolves all of these advantages.) This is simpler than it seems, and I’ll provide a quick guide. Let me know if you need help with CloudFormation and I’ll put a guide up.
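For the CloudFormation route, the shell helper below writes out a minimal template for a “SSH from the bastion only” security group. This is a sketch, not our production template. The helper name, the VpcId and BastionIp parameter names, the output name and the example IDs are assumptions.

```shell
# Hypothetical helper: write a minimal CloudFormation template for a security
# group that admits SSH only from the bastion's IP. Parameter names are ours.
write_ssh_sg_template() {
  # The quoted 'EOF' stops the shell from expanding ${BastionIp}; that is
  # CloudFormation's !Sub syntax, resolved at deploy time.
  cat > "$1" <<'EOF'
AWSTemplateFormatVersion: '2010-09-09'
Description: SSH access from the bastion host only
Parameters:
  VpcId:
    Type: AWS::EC2::VPC::Id
  BastionIp:
    Type: String
    Description: Elastic IP of the bastion host, without a /32 suffix
Resources:
  SshFromBastion:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: SSH from the bastion host only
      VpcId: !Ref VpcId
      SecurityGroupIngress:
        - IpProtocol: tcp
          FromPort: 22
          ToPort: 22
          CidrIp: !Sub '${BastionIp}/32'
Outputs:
  SshFromBastionGroupId:
    Value: !GetAtt SshFromBastion.GroupId
EOF
}

# Example (placeholder IDs): write the template, then create or update the stack.
# write_ssh_sg_template ssh-from-bastion.yaml
# aws cloudformation deploy --template-file ssh-from-bastion.yaml \
#   --stack-name ssh-from-bastion \
#   --parameter-overrides VpcId=vpc-0123456789abcdef0 BastionIp=203.0.113.10
```

Re-running the same deploy command with changed parameters updates the stack in place. This is the repeatability that makes CloudFormation preferable to hand-written SDK scripts.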

Set up your bastion host

Build out a bastion host for your environment as a tiny instance and make this role its sole purpose. Give it an Elastic IP so that you can easily whitelist that one IP on all of your servers. Once it’s set up, I like to enable SSH keep-alive on the server side so that the connection to the bastion host doesn’t keep dropping before you get to your target server. Simply edit /etc/ssh/sshd_config and set ClientAliveInterval to 60, so the server checks in with the client after 60 seconds without data. Then set ClientAliveCountMax to 3, so the server closes the connection after 3 unanswered checks, roughly three minutes with no response. Finally, run service sshd reload so the daemon picks up these settings.
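The keep-alive change can be scripted so it is repeatable, and safe to re-run, on every new bastion. Below is a minimal sketch, assuming a POSIX shell and a sed that supports -E. set_sshd_option is a hypothetical helper, not a standard tool. It appends new settings to the end of the file, so it assumes there is no trailing Match block (settings placed after a Match line only apply inside that block).

```shell
# Hypothetical helper: set "KEY VALUE" in an sshd_config-style file.
# Existing lines for KEY (commented out or not) are rewritten in place;
# otherwise the setting is appended. Note: appends land after any Match block.
set_sshd_option() {
  file=$1 key=$2 value=$3
  if grep -Eq "^#?[[:space:]]*${key}[[:space:]]" "$file"; then
    # Edit via a temp copy, then write back with cat so the original
    # file keeps its ownership and permissions.
    sed -E "s/^#?[[:space:]]*${key}[[:space:]].*/${key} ${value}/" "$file" > "$file.tmp" &&
      cat "$file.tmp" > "$file" &&
      rm -f "$file.tmp"
  else
    printf '%s %s\n' "$key" "$value" >> "$file"
  fi
}

# Example, run as root on the bastion:
# set_sshd_option /etc/ssh/sshd_config ClientAliveInterval 60
# set_sshd_option /etc/ssh/sshd_config ClientAliveCountMax 3
# sshd -t && service sshd reload   # validate first; the service is "ssh" on Debian/Ubuntu
```

If you also script the bastion itself, you can allocate the Elastic IP with aws ec2 allocate-address --domain vpc and attach it with aws ec2 associate-address --instance-id <instance> --allocation-id <allocation>.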

SSH Agent Forwarding

Do NOT store your private keys on any server, especially one that other users can access. Doing so destroys accountability across your team, because anyone who can read that key can impersonate you. Instead, use SSH agent forwarding. Your private key stays on your own machine, and when you connect onward from the bastion, the authentication request is forwarded back to the ssh-agent running locally. To enable it, simply pass the -A flag to your ssh connection.

ssh -A <user>@<host>
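For -A to have anything to forward, your local ssh-agent must be running and holding your key. Below is a minimal sketch, assuming OpenSSH’s ssh-agent and ssh-add on your workstation. ensure_key_in_agent is a hypothetical helper, and the key path, user and addresses in the example are placeholders. It relies on ssh-add -l exiting with 2 when no agent is reachable and with 1 when the agent holds no identities.

```shell
# Hypothetical helper: make sure an ssh-agent is reachable and holds a key,
# so that "ssh -A" has something to forward. Source it into your login shell
# (not a subshell) so the exported SSH_AUTH_SOCK persists.
ensure_key_in_agent() {
  key=$1
  ssh-add -l >/dev/null 2>&1
  status=$?
  if [ "$status" -eq 2 ]; then
    # 2: no agent reachable, so start one and export its socket here.
    eval "$(ssh-agent -s)" >/dev/null
    status=1
  fi
  if [ "$status" -eq 1 ]; then
    # 1: agent has no identities yet; prompts for the key's passphrase.
    ssh-add "$key"
  fi
  # 0: agent already holds at least one key; this sketch assumes it is yours.
}

# Example (placeholders):
# ensure_key_in_agent "$HOME/.ssh/id_ed25519"
# ssh -A ec2-user@203.0.113.10    # hop 1: the bastion
# ssh ec2-user@10.0.1.25          # hop 2, run on the bastion; signed by your local agent
```

Once you are on the bastion, running ssh-add -l there should list your key. That confirms the agent is being forwarded rather than a key being stored on the host.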

Recommendations:

- Never set up your SSH keys without a passphrase! (If someone acquires your key, they have unfettered access to your kingdom.)

- Utilize CloudFormation for repeatable deployments

- Deploy role-based security groups such as SSH, Webservers, Mailservers and Ping, and attach the right combination of them to each instance depending on its role.

- Couple this with Chef and you can literally feel the weight slide off your shoulders.

- Centrally manage your users (use LDAP, Chef / Puppet or AD)

- Automate, automate, automate. As an engineer, you should be striving to automate any repetitive work in your day-to-day operations. If your server deployments take more than 15-20 minutes from start to finish, you’re doing it wrong.
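To act on the first recommendation, you can check whether an existing private key is passphrase-protected and add a passphrase if it isn’t. Below is a minimal sketch, assuming OpenSSH’s ssh-keygen. key_has_passphrase is a hypothetical helper and the key path is a placeholder. It asks ssh-keygen -y to re-derive the public key with an empty passphrase (-P ''), which only succeeds for unencrypted keys.

```shell
# Hypothetical helper: succeed (exit 0) if the private key is encrypted.
# ssh-keygen -y re-derives the public key; with -P '' it tries an empty
# passphrase and fails instead of prompting when the key is encrypted.
key_has_passphrase() {
  [ -r "$1" ] || { echo "cannot read $1" >&2; return 2; }
  ! ssh-keygen -y -P '' -f "$1" >/dev/null 2>&1 </dev/null
}

# Example (placeholder path): add a passphrase if the key has none.
# key_has_passphrase "$HOME/.ssh/id_ed25519" || ssh-keygen -p -f "$HOME/.ssh/id_ed25519"
```

ssh-keygen -p rewrites the existing key file with a new passphrase and keeps the same key pair. Public keys already deployed to your servers therefore keep working.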

You should never have to modify the security groups of your production instances again, at least for remote access. Instead, you only manage access to your jumpbox and avoid messy security group rules. If a user leaves the company, just disable their access to the jumpbox and you’ve effectively cut them off. No more scripting to recursively remove them from every machine.
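Cutting someone off at the jumpbox can be a short, repeatable step. Below is a minimal sketch for a Linux bastion with local accounts, run by an administrator with sudo. revoke_bastion_user is a hypothetical helper. If you manage users centrally (LDAP, AD), you would disable the account there instead. On typical setups, expiring the account with chage -E 0 makes sshd refuse new logins for it, key-based ones included, while pkill ends any sessions already open.

```shell
# Hypothetical helper for a Linux bastion with local accounts; needs sudo.
revoke_bastion_user() {
  user=$1
  # Expire the account (day 0 = 1970-01-01). sshd checks expiry at login,
  # so new sessions and tunnels through the bastion are refused.
  sudo chage -E 0 "$user"
  # Kill anything the user already has running, including open SSH sessions.
  sudo pkill -KILL -u "$user" || true
}

# Example (placeholder username):
# revoke_bastion_user alice
```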

That still leaves the question of connecting to Windows instances, which don’t use SSH; I’ll get into that in part two.